MLE-STAR (Machine Learning Engineering via Search and Targeted Refinement) is a state-of-the-art agent system developed by Google Cloud researchers to automate the design and optimization of complex machine learning (ML) pipelines. By combining web-scale search, targeted code refinement, and dedicated checking modules, MLE-STAR achieves strong performance on a range of machine learning engineering tasks, significantly outperforming previous autonomous ML agents and even human baseline methods.

The Problem: Automating Machine Learning Engineering

While large language models (LLMs) have made inroads into code generation and workflow automation, existing ML engineering agents struggle with:

- Overreliance on LLM memory: Tending to default to “familiar” models (e.g., using only scikit-learn for tabular data), overlooking cutting-edge, task-specific approaches.

- Coarse “all-at-once” iteration: Previous agents modify whole scripts in one shot, lacking deep, targeted exploration of pipeline components like feature engineering, data preprocessing, or model ensembling.

- Poor error and leakage handling: Generated code is prone to bugs, data leakage, or omission of provided data files.

MLE-STAR: Core Innovations

MLE-STAR introduces several key advances over prior solutions:

1. Web Search–Guided Model Selection

Instead of drawing solely from its internal “training,” MLE-STAR uses external search to retrieve state-of-the-art models and code snippets relevant to the provided task and dataset. It anchors the initial solution in current best practices, not just what LLMs “remember”.
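As a rough illustration of this step, here is a minimal sketch of ranking retrieved candidates by validation score. Everything named here (`Candidate`, `rank_candidates`, `fake_search`, the model names and the scores) is a hypothetical stand-in, not MLE-STAR's actual interface; in the real system the search and evaluation are carried out by agents and actual training runs.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Candidate:
    name: str          # model name surfaced by the (hypothetical) search step
    example_code: str  # code snippet retrieved with it; placeholder text here


def rank_candidates(
    task: str,
    search_fn: Callable[[str], List[Candidate]],
    evaluate_fn: Callable[[Candidate], float],
) -> List[Tuple[float, Candidate]]:
    """Retrieve candidates for a task and rank them by validation score (higher is better)."""
    candidates = search_fn(task)
    scored = [(evaluate_fn(c), c) for c in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored


# Stand-ins for the real search tool and training runs; the scores are made up.
def fake_search(task: str) -> List[Candidate]:
    return [
        Candidate("logistic_regression", "<retrieved snippet>"),
        Candidate("gradient_boosting", "<retrieved snippet>"),
        Candidate("tabular_transformer", "<retrieved snippet>"),
    ]


fake_scores = {
    "logistic_regression": 0.81,
    "gradient_boosting": 0.88,
    "tabular_transformer": 0.86,
}

ranked = rank_candidates(
    "tabular binary classification",
    fake_search,
    lambda c: fake_scores[c.name],
)
best_score, best_candidate = ranked[0]
print(best_candidate.name, best_score)
```

The point of the sketch is the ordering: candidates come from outside the model's memory first, and only then are they compared on held-out performance.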

2. Nested, Targeted Code Refinement

MLE-STAR improves its solutions via a two-loop refinement process:

- Outer Loop (Ablation-driven): Runs ablation studies on the evolving code to identify which pipeline component (data prep, model, feature engineering, etc.) most impacts performance.

- Inner Loop (Focused Exploration): Iteratively generates and tests variations for just that component, using structured feedback.

This enables deep, component-wise exploration—e.g., extensively testing ways to extract and encode categorical features rather than blindly changing everything at once.
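The two loops can be sketched on a toy pipeline as follows. This is an illustration under simplifying assumptions, not the paper's implementation: a pipeline is reduced to a dictionary of named components, "ablation" means swapping a component for a trivial baseline, and the scoring function, component names and score contributions are all invented. Skipping components that were already refined is likewise an assumption of this sketch.

```python
from typing import Callable, Dict, List, Set, Tuple

Pipeline = Dict[str, str]  # component name -> chosen variant


def most_impactful(
    pipeline: Pipeline,
    baselines: Pipeline,
    score_fn: Callable[[Pipeline], float],
    skip: Set[str],
) -> str:
    """Outer-loop step: ablate each component and return the one whose removal hurts most."""
    full_score = score_fn(pipeline)
    drops = {}
    for name in pipeline:
        if name in skip:
            continue
        ablated = {**pipeline, name: baselines[name]}
        drops[name] = full_score - score_fn(ablated)
    return max(drops, key=drops.get)


def refine(
    pipeline: Pipeline,
    baselines: Pipeline,
    variants: Dict[str, List[str]],
    score_fn: Callable[[Pipeline], float],
    outer_steps: int = 2,
) -> Tuple[Pipeline, float]:
    best, best_score = dict(pipeline), score_fn(pipeline)
    refined: Set[str] = set()
    for _ in range(min(outer_steps, len(pipeline))):
        target = most_impactful(best, baselines, score_fn, refined)
        # Inner loop: try alternatives for the targeted component only.
        for option in variants.get(target, []):
            trial = {**best, target: option}
            trial_score = score_fn(trial)
            if trial_score > best_score:
                best, best_score = trial, trial_score
        refined.add(target)
    return best, best_score


# Toy scoring: each component adds a fixed, made-up amount to the validation score.
contribution = {
    "preprocessing": {"none": 0.00, "standardize": 0.01, "robust_scale": 0.015},
    "features": {"none": 0.00, "one_hot": 0.03, "target_encoding": 0.05},
    "model": {"baseline": 0.70, "random_forest": 0.78, "gradient_boosting": 0.80},
}


def toy_score(p: Pipeline) -> float:
    return sum(contribution[name][choice] for name, choice in p.items())


start = {"preprocessing": "standardize", "features": "one_hot", "model": "random_forest"}
baselines = {"preprocessing": "none", "features": "none", "model": "baseline"}
variants = {
    "preprocessing": ["robust_scale"],
    "features": ["target_encoding"],
    "model": ["gradient_boosting"],
}

final_pipeline, final_score = refine(start, baselines, variants, toy_score, outer_steps=2)
print(final_pipeline, round(final_score, 3))
```

With these toy numbers the first outer step targets the model (its ablation costs the most) and the second targets feature encoding, while preprocessing is left untouched because only two outer steps were requested.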

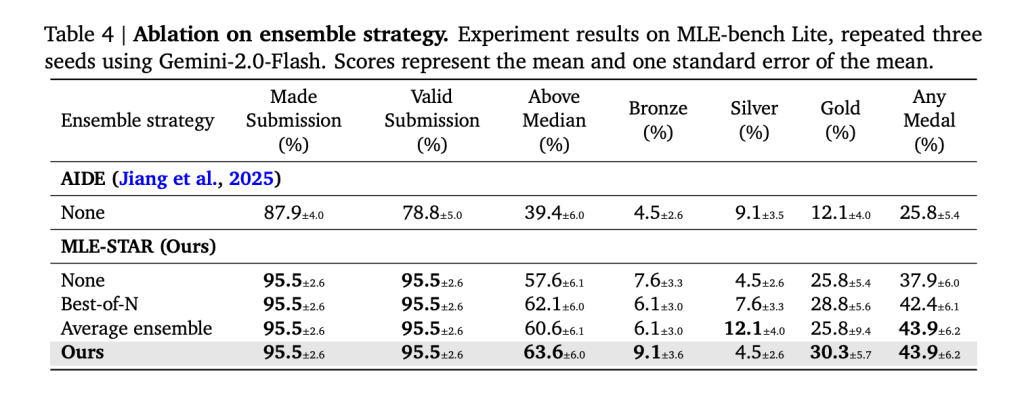

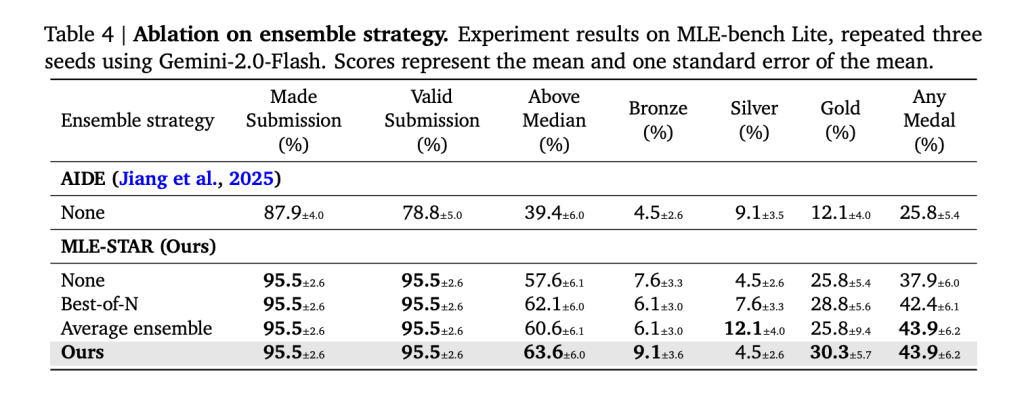

3. Self-Improving Ensembling Strategy

MLE-STAR proposes, implements, and refines novel ensemble methods by combining multiple candidate solutions. Rather than just “best-of-N” voting or simple averages, it uses its planning abilities to explore advanced strategies (e.g., stacking with bespoke meta-learners or optimized weight search).
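To make "optimized weight search" concrete, here is a minimal sketch of one such strategy: a grid search over non-negative weights that sum to one, minimizing mean squared error on validation predictions. The function name, the grid step and the synthetic data are assumptions for illustration; the source does not specify which search procedure MLE-STAR ends up using on any given task.

```python
import itertools

import numpy as np


def search_weights(preds: np.ndarray, y: np.ndarray, step: float = 0.1):
    """Grid-search ensemble weights on the simplex.

    preds has shape (n_models, n_samples); returns (weights, validation MSE).
    """
    n_models = preds.shape[0]
    ticks = int(round(1 / step))
    best_w, best_err = None, np.inf
    # Enumerate integer weight counts for the first n-1 models;
    # the last model receives whatever remains so the weights sum to 1.
    for combo in itertools.product(range(ticks + 1), repeat=n_models - 1):
        if sum(combo) > ticks:
            continue
        w = np.array(list(combo) + [ticks - sum(combo)], dtype=float) / ticks
        err = float(np.mean((w @ preds - y) ** 2))
        if err < best_err:
            best_w, best_err = w, err
    return best_w, best_err


# Synthetic validation data: three "models" that are the target plus independent noise.
rng = np.random.default_rng(0)
y_val = rng.normal(size=200)
val_preds = np.stack([y_val + rng.normal(scale=s, size=200) for s in (0.5, 0.6, 0.8)])

single_errors = np.mean((val_preds - y_val) ** 2, axis=1)
weights, ensemble_error = search_weights(val_preds, y_val)
print(weights, ensemble_error, single_errors)
```

Because the grid includes the corner cases where a single model gets all the weight, the searched ensemble can never score worse on the validation set than the best individual model. The weights should still be confirmed on data not used for the search, since they are fitted to the validation predictions.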

4. Robustness through Specialized Agents

- Debugging Agent: Automatically catches and corrects Python errors (tracebacks) until the script runs or maximum attempts are reached.

- Data Leakage Checker: Inspects code to prevent information from test or validation samples biasing the training process.

- Data Usage Checker: Ensures the solution script maximizes the use of all provided data files and relevant modalities, improving model performance and generalizability.
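The debugging agent's retry behavior can be sketched as a simple loop: run the script, hand any traceback to a fixer, and stop once the script runs or the attempt budget is spent. In this sketch `try_run`, `debug_until_runs` and `toy_fix` are hypothetical names, and `toy_fix` is a hard-coded stand-in for the LLM call that would propose a patch. The script is executed in-process for brevity; a real system would isolate it in a sandboxed subprocess.

```python
import traceback
from typing import Callable, Tuple


def try_run(source: str) -> Tuple[bool, str]:
    """Execute source in a fresh namespace; return (succeeded, traceback text)."""
    try:
        exec(compile(source, "<candidate>", "exec"), {"__name__": "__main__"})
        return True, ""
    except Exception:
        return False, traceback.format_exc()


def debug_until_runs(
    source: str,
    fix_fn: Callable[[str, str], str],
    max_attempts: int = 3,
) -> Tuple[str, bool, int]:
    """Repeatedly apply fix_fn until the script runs or max_attempts fixes were tried."""
    ok, tb = try_run(source)
    attempts = 0
    while not ok and attempts < max_attempts:
        source = fix_fn(source, tb)
        attempts += 1
        ok, tb = try_run(source)
    return source, ok, attempts


# A script with a misspelled variable name, and a toy fixer that only knows this one bug.
broken = "values = [1, 2, 3]\ntotal = sum(values)\nresult = totl * 2\n"


def toy_fix(source: str, tb: str) -> str:
    if "NameError" in tb:
        return source.replace("totl", "total")
    return source


fixed_source, succeeded, attempts = debug_until_runs(broken, toy_fix)
print(succeeded, attempts)
```

The attempt cap matters: without it, a fixer that keeps returning broken code would loop forever.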

Quantitative Results: Outperforming the Field

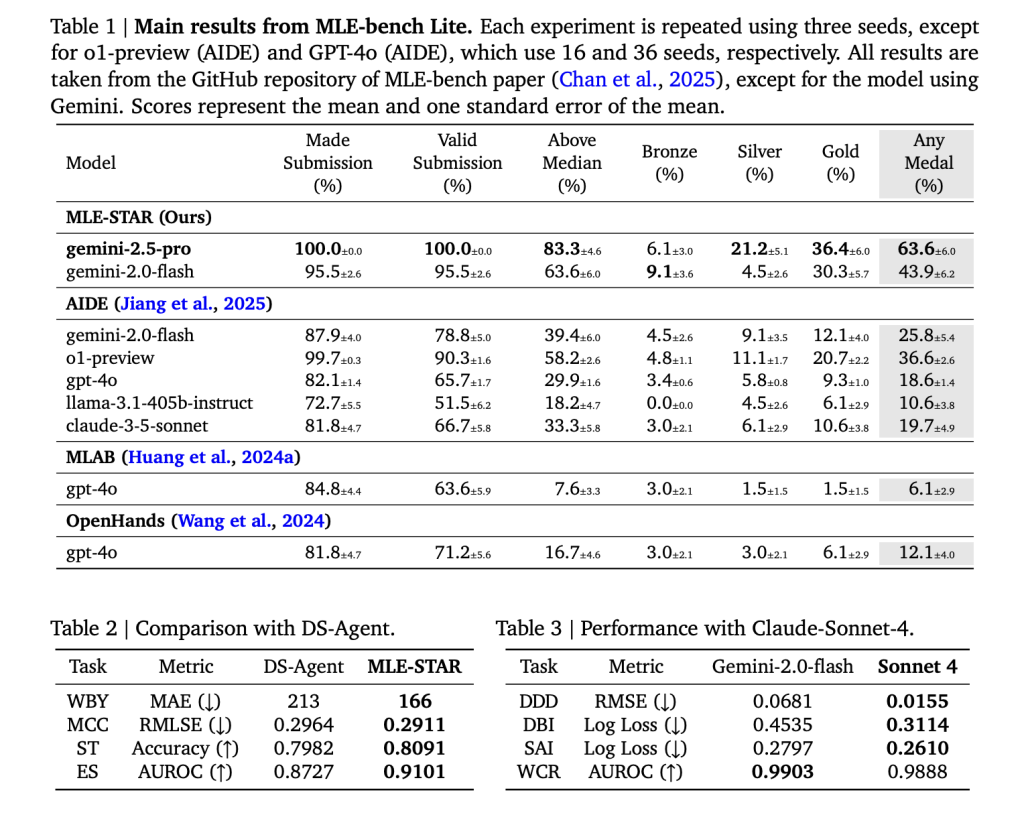

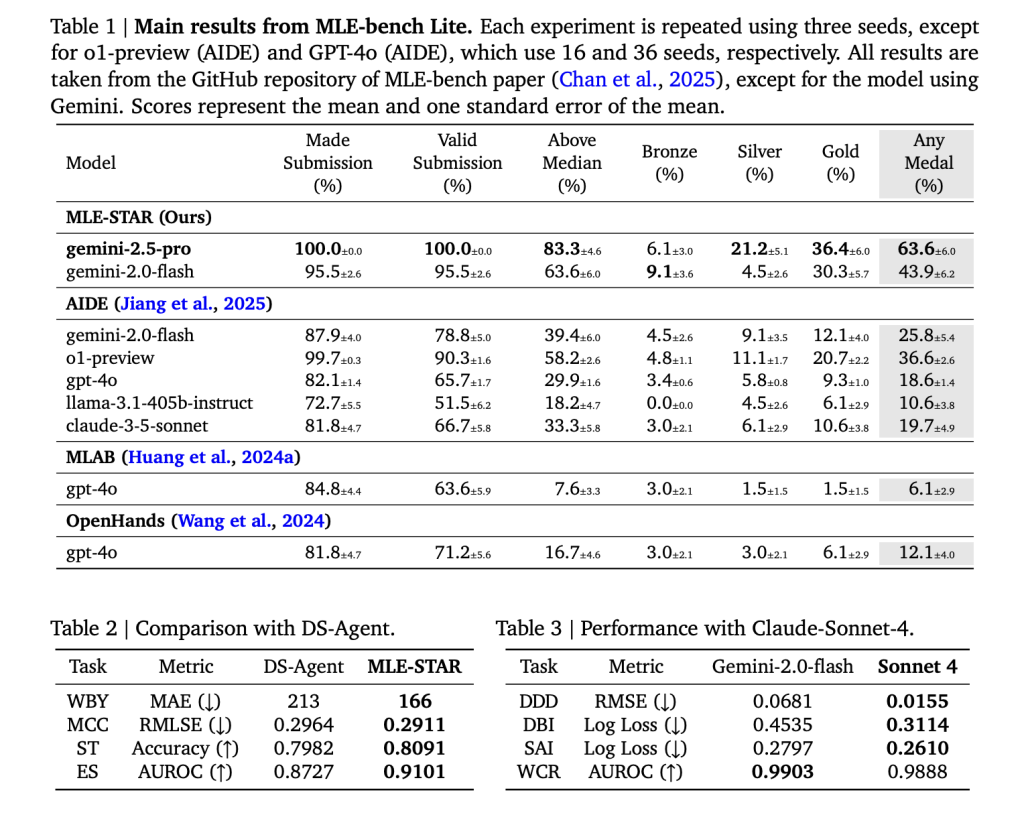

MLE-STAR’s effectiveness is rigorously validated on the MLE-Bench-Lite benchmark (22 challenging Kaggle competitions spanning tabular, image, audio, and text tasks):

| Metric | MLE-STAR (Gemini-2.5-Pro) | AIDE (Best Baseline) |
|---|---|---|
| Any Medal Rate | 63.6% | 25.8% |
| Gold Medal Rate | 36.4% | 12.1% |
| Above Median | 83.3% | 39.4% |
| Valid Submission | 100% | 78.8% |

- MLE-STAR achieves more than double the rate of “medal” (top-tier) solutions compared to previous best agents.

- On image tasks, MLE-STAR overwhelmingly chooses modern architectures (EfficientNet, ViT) over older standbys like ResNet, which translates directly into higher medal rates.

- The ensemble strategy contributes a further boost by combining winning solutions rather than just picking one of them.
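The "more than double" claim can be checked directly against the table. The short snippet below only recomputes ratios from the reported percentages; the variable names are arbitrary.

```python
# Percentages copied from the results table: (MLE-STAR, AIDE).
results = {
    "Any Medal Rate": (63.6, 25.8),
    "Gold Medal Rate": (36.4, 12.1),
    "Above Median": (83.3, 39.4),
    "Valid Submission": (100.0, 78.8),
}

# Ratio of MLE-STAR's rate to the baseline's rate for each metric.
ratios = {metric: star / aide for metric, (star, aide) in results.items()}

for metric, ratio in ratios.items():
    print(f"{metric}: {ratio:.2f}x")
```

The any-medal rate comes out at roughly 2.5 times the baseline and the gold-medal rate at roughly 3 times, so "more than double" is, if anything, conservative for those two metrics.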

Technical Insights: Why MLE-STAR Wins

- Search as Foundation: By pulling example code and model cards from the web at run time, MLE-STAR stays far more up to date—automatically including new model types in its initial proposals.

- Ablation-Guided Focus: Systematically measuring the contribution of each code segment allows “surgical” improvements—first on the most impactful pieces (e.g., targeted feature encodings, advanced model-specific preprocessing).

- Adaptive Ensembling: The ensemble agent doesn’t just average; it intelligently tests stacking, regression meta-learners, optimal weighting, and more.

- Rigorous Safety Checks: Error correction, data leakage prevention, and full data usage unlock much higher validation and test scores, avoiding pitfalls that trip up vanilla LLM code generation.
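One common form of the data leakage mentioned above is fitting preprocessing statistics on train and test data together. The sketch below contrasts the leaky and the safe version of a standardization step using made-up numbers; it illustrates the kind of mistake a leakage checker would look for, not how MLE-STAR's checker is implemented.

```python
import numpy as np


def fit_standardizer(x: np.ndarray):
    """Compute per-column mean and standard deviation."""
    return x.mean(axis=0), x.std(axis=0)


def apply_standardizer(x: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    return (x - mean) / std


train = np.array([[1.0], [2.0], [3.0]])
test = np.array([[100.0]])  # an extreme test value makes the leak easy to see

# Leaky: statistics computed on train and test together, so test data shapes the training features.
leaky_mean, leaky_std = fit_standardizer(np.vstack([train, test]))

# Safe: statistics computed on the training split only, then reused for the test split.
safe_mean, safe_std = fit_standardizer(train)
train_scaled = apply_standardizer(train, safe_mean, safe_std)
test_scaled = apply_standardizer(test, safe_mean, safe_std)

print(leaky_mean, safe_mean)
```

In the leaky version the single test row drags the mean from 2.0 to 26.5, so the scaled training features carry information about the test set before the model has seen it.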

Extensibility and Human-in-the-loop

MLE-STAR is also extensible:

- Human experts can inject cutting-edge model descriptions for faster adoption of the latest architectures.

- The system is built atop Google’s Agent Development Kit (ADK), facilitating open-source adoption and integration into broader agent ecosystems, as shown in the official samples.

Conclusion

MLE-STAR represents a true leap in the automation of machine learning engineering. By enforcing a workflow that begins with search, tests code via ablation-driven loops, blends solutions with adaptive ensembling, and polices code outputs with specialized agents, it outperforms prior art and even many human competitors. Its open-source codebase means that researchers and ML practitioners can now integrate and extend these state-of-the-art capabilities in their own projects, accelerating both productivity and innovation.

Check out the Paper, GitHub Page and Technical details.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.
