Estimated reading time: 4 minutes

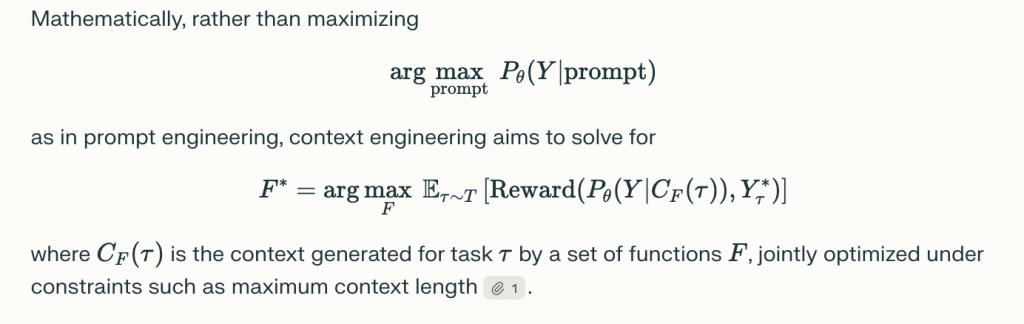

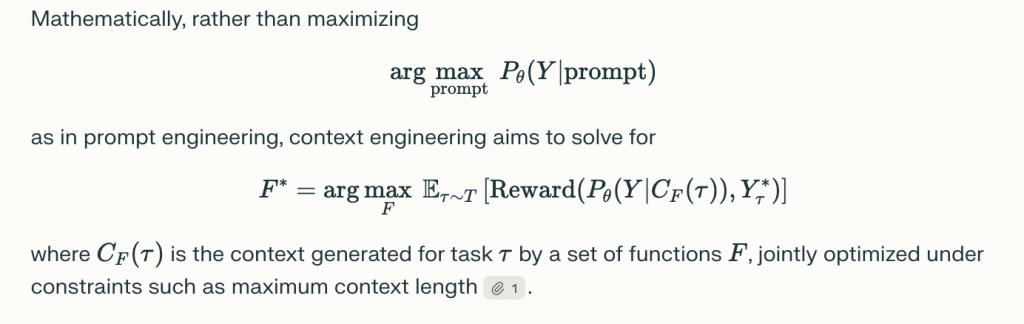

The paper “A Survey of Context Engineering for Large Language Models” establishes Context Engineering as a formal discipline that goes far beyond prompt engineering, providing a unified, systematic framework for designing, optimizing, and managing the information that guides Large Language Models (LLMs). Here’s an overview of its main contributions and framework:

What Is Context Engineering?

Context Engineering is defined as the science and engineering of organizing, assembling, and optimizing all forms of context fed into LLMs to maximize performance across comprehension, reasoning, adaptability, and real-world application. Rather than viewing context as a static string (the premise of prompt engineering), context engineering treats it as a dynamic, structured assembly of components—each sourced, selected, and organized through explicit functions, often under tight resource and architectural constraints.

Taxonomy of Context Engineering

The paper breaks down context engineering into:

1. Foundational Components

a. Context Retrieval and Generation

- Encompasses prompt engineering, in-context learning (zero/few-shot, chain-of-thought, tree-of-thought, graph-of-thought), external knowledge retrieval (e.g., Retrieval-Augmented Generation, knowledge graphs), and dynamic assembly of context elements1.

- Techniques like CLEAR Framework, dynamic template assembly, and modular retrieval architectures are highlighted.

b. Context Processing

- Addresses long-sequence processing (with architectures like Mamba, LongNet, FlashAttention), context self-refinement (iterative feedback, self-evaluation), and integration of multimodal and structured information (vision, audio, graphs, tables).

- Strategies include attention sparsity, memory compression, and in-context learning meta-optimization.

c. Context Management

- Involves memory hierarchies and storage architectures (short-term context windows, long-term memory, external databases), memory paging, context compression (autoencoders, recurrent compression), and scalable management over multi-turn or multi-agent settings.

2. System Implementations

a. Retrieval-Augmented Generation (RAG)

- Modular, agentic, and graph-enhanced RAG architectures integrate external knowledge and support dynamic, sometimes multi-agent retrieval pipelines.

- Enables both real-time knowledge updates and complex reasoning over structured databases/graphs.

b. Memory Systems

- Implement persistent and hierarchical storage, enabling longitudinal learning and knowledge recall for agents (e.g., MemGPT, MemoryBank, external vector databases).

- Key for extended, multi-turn dialogs, personalized assistants, and simulation agents.

c. Tool-Integrated Reasoning

- LLMs use external tools (APIs, search engines, code execution) via function calling or environment interaction, combining language reasoning with world-acting abilities.

- Enables new domains (math, programming, web interaction, scientific research).

d. Multi-Agent Systems

- Coordination among multiple LLMs (agents) via standardized protocols, orchestrators, and context sharing—essential for complex, collaborative problem-solving and distributed AI applications.

Key Insights and Research Gaps

- Comprehension–Generation Asymmetry: LLMs, with advanced context engineering, can comprehend very sophisticated, multi-faceted contexts but still struggle to generate outputs matching that complexity or length.

- Integration and Modularity: Best performance comes from modular architectures combining multiple techniques (retrieval, memory, tool use).

- Evaluation Limitations: Current evaluation metrics/benchmarks (like BLEU, ROUGE) often fail to capture the compositional, multi-step, and collaborative behaviors enabled by advanced context engineering. New benchmarks and dynamic, holistic evaluation paradigms are needed.

- Open Research Questions: Theoretical foundations, efficient scaling (especially computationally), cross-modal and structured context integration, real-world deployment, safety, alignment, and ethical concerns remain open research challenges.

Applications and Impact

Context engineering supports robust, domain-adaptive AI across:

- Long-document/question answering

- Personalized digital assistants and memory-augmented agents

- Scientific, medical, and technical problem-solving

- Multi-agent collaboration in business, education, and research

Future Directions

- Unified Theory: Developing mathematical and information-theoretic frameworks.

- Scaling & Efficiency: Innovations in attention mechanisms and memory management.

- Multi-Modal Integration: Seamless coordination of text, vision, audio, and structured data.

- Robust, Safe, and Ethical Deployment: Ensuring reliability, transparency, and fairness in real-world systems.

In summary: Context Engineering is emerging as the pivotal discipline for guiding the next generation of LLM-based intelligent systems, shifting the focus from creative prompt writing to the rigorous science of information optimization, system design, and context-driven AI.

Check out the Paper. Feel free to check out our GitHub Page for Tutorials, Codes and Notebooks. Also, feel free to follow us on Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter.

Leave a comment