Existing long chain-of-thought (long-CoT) reasoning models have achieved state-of-the-art performance in mathematical reasoning by generating reasoning trajectories with iterative self-verification and refinement. However, open-source long-CoT models depend only on natural language reasoning traces, which makes them computationally expensive and, without verification mechanisms, prone to errors. Tool-aided reasoning is more efficient and reliable for large-scale numerical computation, with frameworks like OpenHands integrating code interpreters for this purpose, but these agentic approaches struggle with abstract or conceptually complex reasoning problems.

DualDistill Framework and Agentic-R1 Model

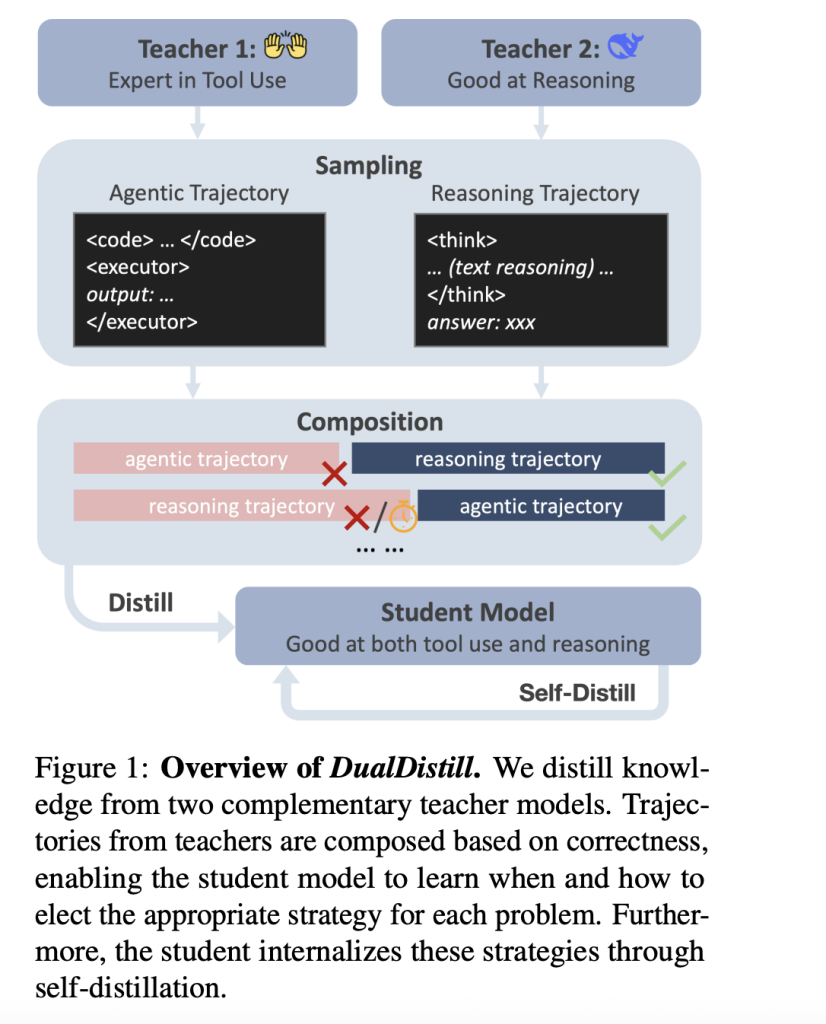

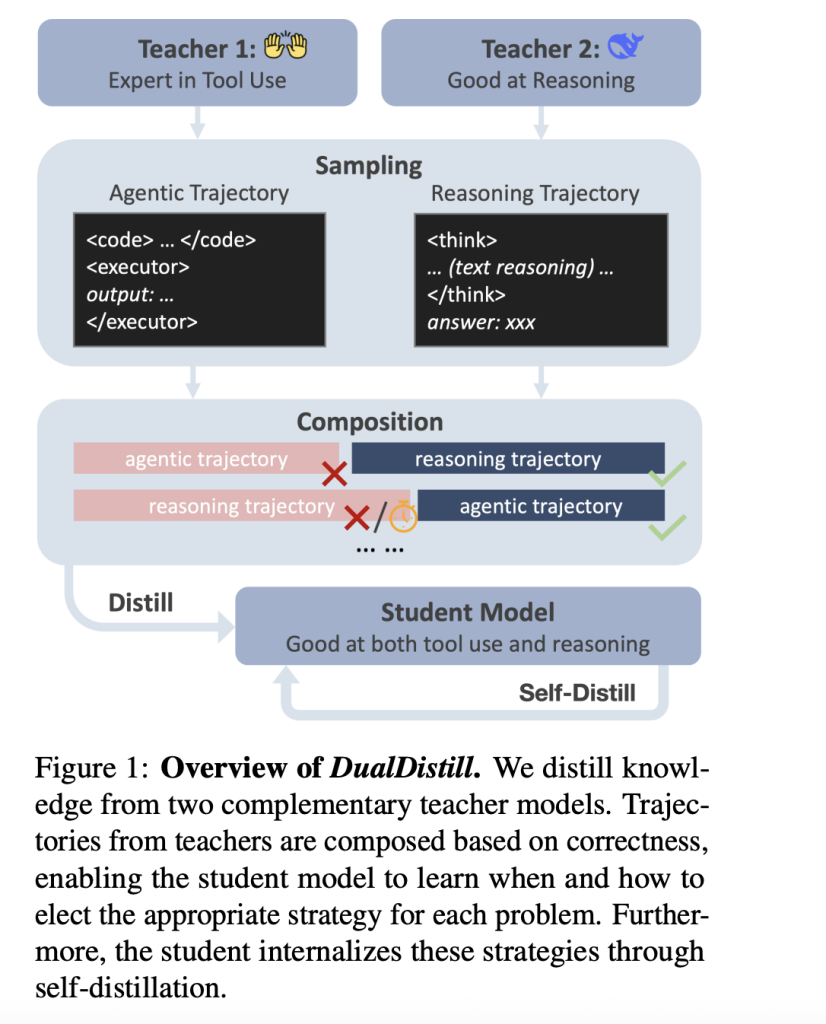

Researchers from Carnegie Mellon University have proposed DualDistill, a distillation framework that combines trajectories from two complementary teachers to create a unified student model. One teacher is reasoning-oriented (DeepSeek-R1, which reasons in text) and the other is tool-augmented (OpenHands, which acts as the agentic teacher). DualDistill first uses trajectory composition to distill knowledge from both teachers and then applies self-distillation. The resulting student, Agentic-R1, learns to dynamically select the most appropriate strategy for each problem type: it executes code for arithmetic and algorithmic tasks and uses natural language reasoning for abstract problems.
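The article does not spell out how trajectory composition works, so the following is only a minimal sketch of one plausible reading: for each problem, compare both teachers' answers against a reference and build a single training trajectory from them. The `Trajectory` class, the `compose_trajectory` function, the transition marker, and the tie-breaking rule are all hypothetical and are not taken from the DualDistill code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trajectory:
    strategy: str  # "text" (reasoning teacher) or "code" (agentic teacher)
    text: str      # full solution trace produced by the teacher
    answer: str    # final answer extracted from the trace


def compose_trajectory(
    reference: str, text_traj: Trajectory, code_traj: Trajectory
) -> Optional[str]:
    """Build one training trajectory from two teacher attempts (assumed scheme)."""
    text_ok = text_traj.answer == reference
    code_ok = code_traj.answer == reference

    if text_ok and code_ok:
        # Assumption: when both teachers succeed, keep the shorter trace.
        return min((text_traj, code_traj), key=lambda t: len(t.text)).text

    if text_ok or code_ok:
        # Assumption: show the failed attempt, then a switch to the
        # strategy that solved the problem.
        failed, solved = (code_traj, text_traj) if text_ok else (text_traj, code_traj)
        return (
            f"{failed.text}\n"
            f"[switching strategy to {solved.strategy}]\n"
            f"{solved.text}"
        )

    # Neither teacher solved the problem: no training example in this sketch.
    return None
```

Under these assumptions, a problem that only the agentic teacher solves yields a trajectory that starts with the failed text reasoning and ends with the successful code-based solution, which is one way a student could learn when to change strategy.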

Evaluation and Benchmarks

The proposed method is evaluated on multiple benchmarks, including DeepMath-L and Combinatorics300, to test different aspects of mathematical reasoning. It is compared against two similarly sized baselines, each specializing in one strategy: Qwen2.5-7B-Instruct for tool-assisted solving and DeepSeek-R1-Distill-7B for pure reasoning. The student model, Agentic-R1, improves on both by drawing on agentic and reasoning strategies together. It outperforms tool-based models by falling back on reasoning when a problem requires it, and it is more efficient than pure reasoning models on standard mathematical tasks.

Qualitative Analysis and Tool Usage Patterns

Qualitative analysis shows that Agentic-R1 uses tools selectively: it activates code execution on 79.2% of the computationally demanding Combinatorics300 problems but on only 52.0% of the simpler AMC problems. Agentic-R1 learns to invoke tools appropriately through supervised fine-tuning alone, without explicit instruction, balancing computational efficiency and reasoning accuracy.
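A tool activation rate like the ones quoted above can be read as the fraction of model outputs that contain at least one code execution call. The sketch below illustrates that calculation; the `tool_activation_rate` function and the `<code>` marker are hypothetical stand-ins, since the article does not say how tool calls are detected.

```python
from typing import Iterable

# Hypothetical marker for a code execution call in a model output.
CODE_MARKER = "<code>"


def tool_activation_rate(outputs: Iterable[str], marker: str = CODE_MARKER) -> float:
    """Return the fraction of outputs that invoke the code tool at least once."""
    outputs = list(outputs)
    if not outputs:
        return 0.0
    activated = sum(1 for output in outputs if marker in output)
    return activated / len(outputs)


if __name__ == "__main__":
    sample = [
        "Count the cases. <code>print(sum(range(10)))</code>",
        "By symmetry the answer is 1/2.",
        "<code>import math; print(math.comb(10, 3))</code>",
        "Enumerate directly. <code>print(len(list(range(5))))</code>",
    ]
    print(tool_activation_rate(sample))  # 0.75
```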

Robustness to Imperfect Teachers

The framework remains effective even when guided by imperfect teachers. For instance, the agentic teacher achieves only 48.4% accuracy on Combinatorics300, yet the student model improves from 44.7% to 50.9%, ultimately outperforming the teacher.
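Taking the three accuracy figures above at face value, the size of the gains works out as follows (the relative figure is rounded):

```latex
\begin{align*}
\text{student gain} &= 50.9\% - 44.7\% = 6.2 \text{ percentage points} \\
\text{relative gain} &= \frac{6.2}{44.7} \approx 13.9\% \\
\text{margin over teacher} &= 50.9\% - 48.4\% = 2.5 \text{ percentage points}
\end{align*}
```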

Conclusion

In summary, the DualDistill framework effectively combines the strengths of natural language reasoning and tool-assisted problem solving by distilling complementary knowledge from two specialized teacher models into a single versatile student model, Agentic-R1. Through trajectory composition and self-distillation, Agentic-R1 learns to dynamically select the most appropriate strategy for each problem, balancing precision and computational efficiency. Evaluations across diverse mathematical reasoning benchmarks demonstrate that Agentic-R1 outperforms both pure reasoning and tool-based models, even when learning from imperfect teachers. This work highlights a promising approach to building adaptable AI agents capable of integrating heterogeneous problem-solving strategies for more robust and efficient reasoning.

Sajjad Ansari is a final year undergraduate from IIT Kharagpur. As a Tech enthusiast, he delves into the practical applications of AI with a focus on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible manner.
